According to PGIM, the global data sphere is expected to grow to 2100 zettabytes by 2035.

Splunk is a data platform designed to handle large amounts of data for large organizations. There are a few questions we have to answer first:

- How large is the amount of data?

- Can we manage without any dedicated software at all?

- What does Splunk really do?

Most people have the same or similar questions. The answer is that organizations often generate billions or even trillions of events (log lines, transactions, metrics), which makes the data difficult to maintain and analyze by hand. This is where software like Splunk comes into the picture.

Splunk handles unstructured, semi-structured, and structured data. It collects and indexes logs and event data, and lets users search and analyze them at very large volumes.

Splunk is used across many sectors, including cybersecurity, data science, machine learning, artificial intelligence, finance, and telecommunications.

Example:

- A big retailer uses Splunk to monitor millions of transactions daily.

- It analyzes point-of-sale systems, consumer behavior, and supply chain processes to identify fraudulent activity and optimize inventory management.

- Splunk enables the store to make data-driven choices, boost security, and increase operational efficiency.

We’ll learn more about how Splunk can help with cybersecurity on this website.

Common Use Cases:

Splunk has many use cases, but here we focus on the most common ones.

- Monitoring: It is used to monitor infrastructure, applications, and networks, helping teams to spot issues before they escalate.

- Security: It is used to detect threats and support fast incident response.

- Analytics: It delivers operational intelligence and business insights by turning raw data into meaningful metrics.

History

Splunk was founded in 2003 by Erik Swan, Rob Das, and Michael Baum. They wanted to make it easy to organize and retrieve large volumes of machine data. Their solution relied on a powerful search engine to index and search the log data generated by IT infrastructure. More than two decades after entering the market, Splunk remains in demand. In 2024, Cisco completed its acquisition of Splunk.

Product Categories

Splunk offers three product categories:

- Splunk Enterprise is used by businesses with substantial IT infrastructures and IT-driven operations. It ingests data from various sources, including websites, applications, devices, and sensors.

- Splunk Cloud is a cloud-hosted platform with functionality similar to Splunk Enterprise. It is available directly from Splunk or through AWS.

- Splunk Light enables real-time search, reporting, and alerting on log data from a single source. Because it is a lighter package, it offers fewer features and capabilities than the other two editions.

Advantages

There are a few advantages that make this tool one of the best for us:

- Real-Time Data Processing

Splunk can analyze data as soon as it is ingested. This means you can monitor systems, detect threats, and respond to incidents quickly, before they escalate.

- Scalability

Whether you’re handling gigabytes or petabytes of data, Splunk can scale with it. Its distributed architecture lets you add indexers and search heads as your data volume and organizational needs grow.

- User-Friendly Interface

  - You can create reports, alerts, and searches with minimal training.

  - Splunk is accessible to both technical and non-technical users through beginner-friendly dashboards, powerful visualizations, and the Search Processing Language (SPL).

- Comprehensive Ecosystem

  - Splunk supports hundreds of data sources and integrates with cloud platforms, third-party tools, and enterprise systems.

  - The Splunkbase app marketplace further extends functionality with ready-to-use apps and add-ons.

- Flexibility

  - From security operations and IT monitoring to business intelligence, Splunk adapts to nearly any use case.

  - It supports on-premises, cloud, and hybrid deployments, which makes it suitable for diverse environments.

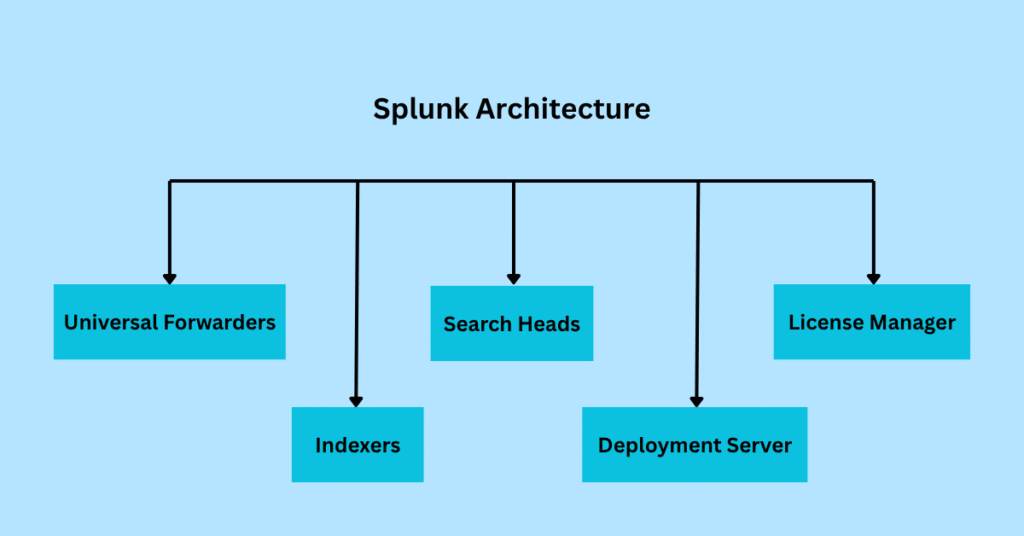

Splunk Architecture

Splunk’s architecture supports the CIA triad (confidentiality, integrity, and availability). For example, replicating data across indexers keeps it available when a server fails. Let’s look at the key components, each of which plays a distinct role:

- Universal Forwarders (UF): A Universal Forwarder is a lightweight agent that collects and forwards data.

- Indexers: Indexers handle parsing, indexing, and storage of data.

- Search Heads (SH): The Search Heads execute search queries and render results.

- Deployment Server (DS): The Deployment Server distributes configurations and applications to forwarders.

- License Manager: The License Manager monitors data ingestion volume to enforce licensing terms and ensure seamless operation.

Data Flow in Splunk

The data flow below shows how these components work together.

Step 1 – Forwarders send data to indexers. This process is known as ingestion.

Step 2 – In the indexers, events are broken down, and metadata is extracted through the parsing process.

Step 3 – Data is stored in a searchable format. It’s called indexing.

Step 4 – Queries are run through the search head. It’s called searching.
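To make the four steps concrete, here is a toy Python model of the flow. It is a sketch, not how Splunk is implemented. The one-event-per-line rule, the ISO timestamp at the start of each line, and the function names `ingest`, `parse`, `index` and `search` are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Event:
    time: datetime
    host: str
    raw: str


def ingest(raw_text: str, host: str) -> tuple[str, str]:
    """Step 1 (ingestion): the forwarder ships raw text plus minimal metadata."""
    return host, raw_text


def parse(host: str, raw_text: str) -> list[Event]:
    """Step 2 (parsing): one event per non-empty line, timestamp taken from the line."""
    events = []
    for line in raw_text.splitlines():
        if not line.strip():
            continue
        stamp = line.split(" ", 1)[0]  # assumes each line starts with an ISO-8601 timestamp
        events.append(Event(datetime.fromisoformat(stamp), host, line))
    return events


def index(events: list[Event], store: list[Event]) -> None:
    """Step 3 (indexing): keep events in time order so searches can scan by time."""
    store.extend(events)
    store.sort(key=lambda e: e.time)


def search(store: list[Event], term: str) -> list[str]:
    """Step 4 (searching): return the raw events that contain the search term."""
    return [e.raw for e in store if term in e.raw]


store: list[Event] = []
host, raw = ingest(
    "2024-05-01T10:00:05 login ok user=alice\n"
    "2024-05-01T10:00:01 login failed user=bob\n",
    "web01",
)
index(parse(host, raw), store)
print(search(store, "failed"))  # ['2024-05-01T10:00:01 login failed user=bob']
```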

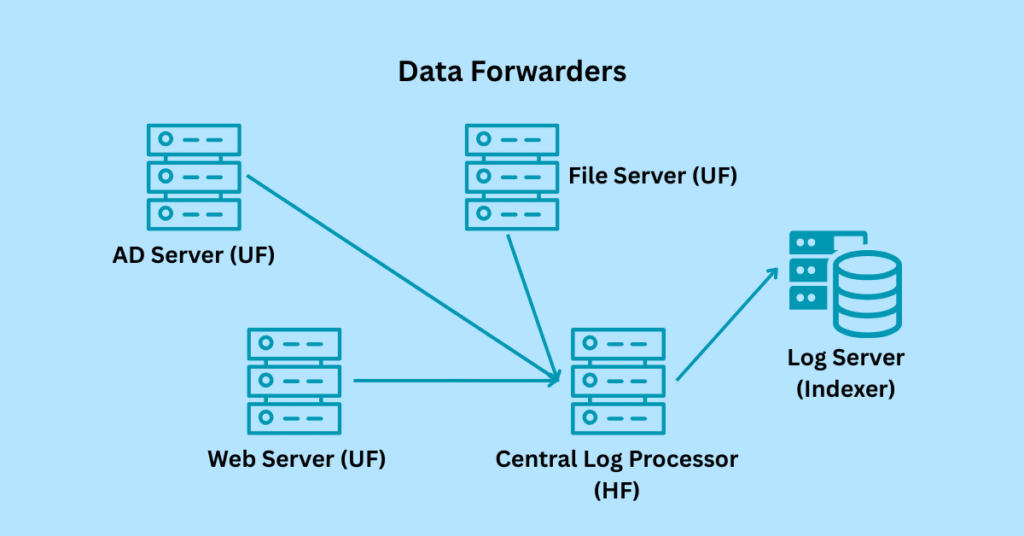

Forwarders Explained

Forwarders are Splunk agents that collect data from various sources and send it to the indexers. So, what are forwarders really in the system?

A forwarder is installed on the machine that produces the data, so forwarders can run on almost any active server in the organization, such as an Active Directory (AD) server, file server, web server, or central log processor.

There are two types of forwarders:

- Universal Forwarder (UF): A lightweight agent installed on source systems. It sends raw data to the indexers and uses minimal resources.

- Heavy Forwarder (HF): A full Splunk Enterprise instance that can parse, filter, and transform data before sending it to the indexer. This extra processing makes it more resource-intensive than a UF.
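The difference between the two forwarder types can be sketched in Python. The UF passes lines through untouched. The HF drops noisy events and masks sensitive values before forwarding them. The DEBUG filter, the 16-digit card-number mask, and both function names are hypothetical examples. In a real deployment this kind of filtering and masking is configured in props.conf and transforms.conf rather than written as code.

```python
import re

CARD_NUMBER = re.compile(r"\b\d{16}\b")


def universal_forwarder(raw_lines: list[str]) -> list[str]:
    """UF: forward every line unchanged; no parsing or filtering."""
    return list(raw_lines)


def heavy_forwarder(raw_lines: list[str], drop_level: str = "DEBUG") -> list[str]:
    """HF: drop noisy events and mask sensitive values before forwarding."""
    forwarded = []
    for line in raw_lines:
        if drop_level in line.split():
            continue  # filter: this event never reaches the indexer
        forwarded.append(CARD_NUMBER.sub("X" * 16, line))  # transform: mask card numbers
    return forwarded


lines = [
    "2024-05-01 INFO payment ok card=4111111111111111",
    "2024-05-01 DEBUG cache miss key=42",
]
print(universal_forwarder(lines))  # both lines, untouched
print(heavy_forwarder(lines))      # only the INFO line, with the card number masked
```

Filtering at the HF also means discarded events never count toward indexed volume.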

Indexers

The indexer receives data from forwarders, indexes it, and stores the data in the appropriate buckets.

Parsing and storing data are the indexer’s most crucial tasks.

You may have a few questions, just as I did:

- What are buckets?

- Where do buckets come from?

- How do I know which bucket holds my data?

For now, wait and watch: the Indexes section below answers these questions.

There are a few terms we need to know first. Let’s dive in.

- Data Ingestion: The process of collecting data from different servers and forwarders and sending it to the indexer.

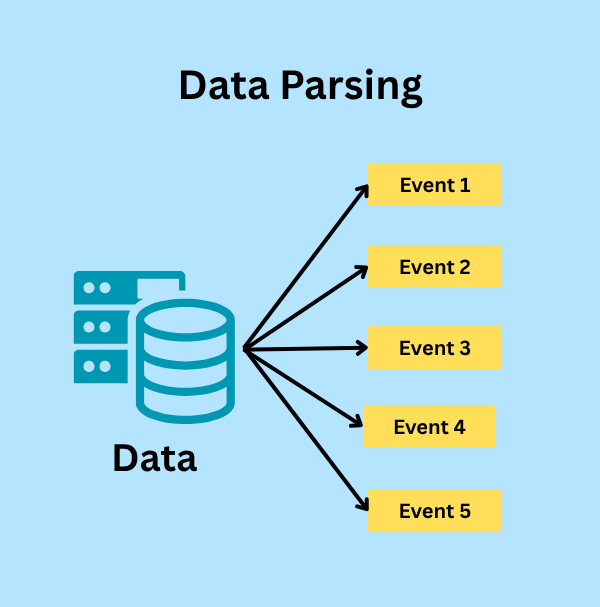

- Parsing: During parsing, the raw data is transformed into individual events.

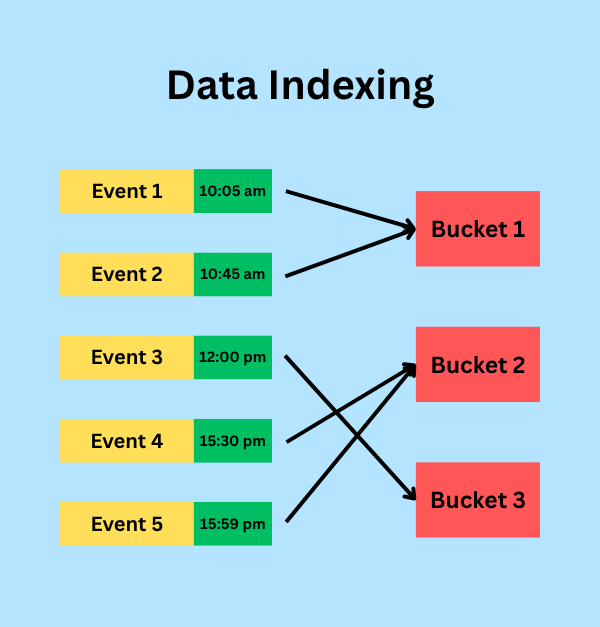

- Indexing: After parsing, all events are stored in buckets, which are categorized by timestamp.

- Response to search: The indexer retrieves data from the appropriate bucket and provides stored events in response to search queries.

- Replication: Replication ensures that data is duplicated across multiple indexers in a clustered environment, providing high availability and fault tolerance.

The images above show that when raw data is imported into Splunk, it is broken into separate events, and that those events are then organized by the timestamp at which they occurred.
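The two steps in those images, breaking raw data into events and organizing the events by time, can be sketched in Python. The sketch uses an assumed rule: a new event starts at any line that begins with a timestamp, and lines without one (such as a stack trace) belong to the previous event. Splunk’s real line breaking is controlled by props.conf settings such as LINE_BREAKER and SHOULD_LINEMERGE. The function names here are hypothetical.

```python
import re
from collections import defaultdict
from datetime import datetime

# Assumed rule: a new event starts at any line beginning with "YYYY-MM-DD HH:MM:SS".
TIMESTAMP = re.compile(r"^(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})")


def break_events(raw: str) -> list[tuple[datetime, str]]:
    """Split raw text into (timestamp, event_text) pairs, merging continuation lines.

    Lines that appear before the first timestamp are ignored in this sketch.
    """
    events: list[tuple[datetime, str]] = []
    for line in raw.splitlines():
        match = TIMESTAMP.match(line)
        if match:
            stamp = datetime.strptime(match.group(1), "%Y-%m-%d %H:%M:%S")
            events.append((stamp, line))
        elif events:
            # No timestamp: append the line to the previous event (e.g. a stack trace).
            stamp, text = events[-1]
            events[-1] = (stamp, text + "\n" + line)
    return events


def group_by_hour(events: list[tuple[datetime, str]]) -> dict[datetime, list[str]]:
    """Organize events by the hour in which they occurred."""
    groups: dict[datetime, list[str]] = defaultdict(list)
    for stamp, text in events:
        groups[stamp.replace(minute=0, second=0)].append(text)
    return dict(groups)


raw = (
    "2024-05-01 09:59:58 ERROR request failed\n"
    "Traceback (most recent call last):\n"
    '  File "app.py", line 3, in handler\n'
    "2024-05-01 10:00:02 INFO retry succeeded\n"
)
for hour, texts in group_by_hour(break_events(raw)).items():
    print(hour, len(texts))  # one event in the 09:00 group, one in the 10:00 group
```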

Indexes

Storage Locations: Indexes are the storage locations where data is saved after being indexed.

Simple enough, but not entirely. Remember the buckets from earlier? This is where they come in.

Indexes are composed of buckets: Hot, Warm, Cold, Frozen, and Thawed.

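- Hot bucket: Newly indexed events are written to a hot bucket. Hot buckets are the only buckets that are actively written to.

- Warm bucket: When a hot bucket is full (or meets another roll condition), the whole bucket rolls to warm and becomes read-only.

- Cold bucket: When there are too many warm buckets, the oldest warm buckets roll to cold, which is often placed on cheaper storage.

- Frozen bucket: When a bucket ages past the retention period, or the index grows beyond its maximum size, the oldest bucket is frozen. By default, frozen data is deleted; it is kept only if an archive location is configured.

- Thawed bucket: When archived frozen data is needed again, it can be restored into the thawed location, where it becomes searchable again.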
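The stages above form a one-way lifecycle, which can be modeled as a small state machine. This Python sketch assumes that whole buckets move between stages and that thawed is a terminal state. The names `Stage`, `TRANSITIONS` and `roll` are hypothetical and exist only for illustration.

```python
from enum import Enum


class Stage(Enum):
    HOT = "hot"
    WARM = "warm"
    COLD = "cold"
    FROZEN = "frozen"
    THAWED = "thawed"


# Allowed moves for a whole bucket; buckets roll as a unit, not event by event.
TRANSITIONS = {
    Stage.HOT: {Stage.WARM},
    Stage.WARM: {Stage.COLD},
    Stage.COLD: {Stage.FROZEN},
    Stage.FROZEN: {Stage.THAWED},  # only possible if frozen data was archived
    Stage.THAWED: set(),           # terminal in this sketch
}


def roll(current: Stage, target: Stage) -> Stage:
    """Move a bucket to a new stage, rejecting any move not listed in TRANSITIONS."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot roll a {current.value} bucket to {target.value}")
    return target


stage = Stage.HOT
for nxt in (Stage.WARM, Stage.COLD, Stage.FROZEN, Stage.THAWED):
    stage = roll(stage, nxt)
print(stage.value)  # thawed
```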

More questions come to mind. Who decides when data moves from one bucket to another?

In Splunk, a hot bucket rolls to warm based on system-driven criteria rather than on the timestamps of individual events. Hot buckets are actively written to, and they roll to warm when specific conditions are met.

These conditions include the bucket exceeding its maximum size, the index exceeding its maximum number of hot buckets, and events such as an indexer restart. In addition, if a new event’s timestamp does not fit within the time span allowed for any existing hot bucket, Splunk creates a new hot bucket. If that pushes the index over its hot-bucket limit, an existing hot bucket rolls to warm.

These transitions are controlled by per-index settings in indexes.conf, including maxDataSize, maxHotBuckets, and maxHotSpanSecs. These settings help Splunk manage resources and keep data organized during indexing.
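The hot-to-warm rules can be simulated with a toy index whose three limits stand in for maxDataSize, maxHotBuckets and maxHotSpanSecs. This is a simplified model, not Splunk’s algorithm. Choosing the bucket with the oldest events to roll when the hot-bucket limit is reached is an assumption, and the classes `HotBucket` and `ToyIndex` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class HotBucket:
    earliest: int        # epoch seconds of the oldest event in the bucket
    latest: int          # epoch seconds of the newest event in the bucket
    size_mb: float = 0.0


@dataclass
class ToyIndex:
    max_data_size_mb: float   # plays the role of maxDataSize
    max_hot_buckets: int      # plays the role of maxHotBuckets
    max_hot_span_secs: int    # plays the role of maxHotSpanSecs
    hot: list = field(default_factory=list)
    warm: list = field(default_factory=list)

    def _roll(self, bucket: HotBucket) -> None:
        self.hot.remove(bucket)
        self.warm.append(bucket)

    def _fits(self, bucket: HotBucket, ts: int) -> bool:
        span = max(bucket.latest, ts) - min(bucket.earliest, ts)
        return span <= self.max_hot_span_secs

    def add_event(self, ts: int, size_mb: float) -> None:
        bucket = next((b for b in self.hot if self._fits(b, ts)), None)
        if bucket is None:
            # No hot bucket can cover this timestamp: open a new one, first rolling
            # the bucket with the oldest events if the hot-bucket limit is reached.
            if len(self.hot) >= self.max_hot_buckets:
                self._roll(min(self.hot, key=lambda b: b.earliest))
            bucket = HotBucket(earliest=ts, latest=ts)
            self.hot.append(bucket)
        bucket.earliest = min(bucket.earliest, ts)
        bucket.latest = max(bucket.latest, ts)
        bucket.size_mb += size_mb
        if bucket.size_mb >= self.max_data_size_mb:
            self._roll(bucket)  # size limit reached

    def restart(self) -> None:
        """Model an indexer restart: every hot bucket rolls to warm."""
        for bucket in list(self.hot):
            self._roll(bucket)


idx = ToyIndex(max_data_size_mb=10, max_hot_buckets=2, max_hot_span_secs=3600)
idx.add_event(0, 1)         # opens hot bucket A
idx.add_event(100, 1)       # fits in A
idx.add_event(10_000, 1)    # outside A's span: opens B
idx.add_event(20_000, 1)    # fits neither and the limit is 2: A rolls, C opens
print(len(idx.hot), len(idx.warm))  # 2 1
idx.add_event(20_100, 9.5)  # C reaches 10.5 MB and rolls on size
print(len(idx.hot), len(idx.warm))  # 1 2
idx.restart()
print(len(idx.hot), len(idx.warm))  # 0 3
```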

Indexer Cluster

An indexer cluster is a group of indexers that work together to make data processing faster, more reliable, and more scalable. Because the cluster keeps multiple copies of the data, it also strengthens the availability part of the CIA triad.

The indexer cluster consists of multiple indexers (peer nodes) working together, managed by a cluster manager.

The cluster manager coordinates the replication and distribution of data across the indexers and keeps the copies consistent, so data remains available even if some indexers fail.

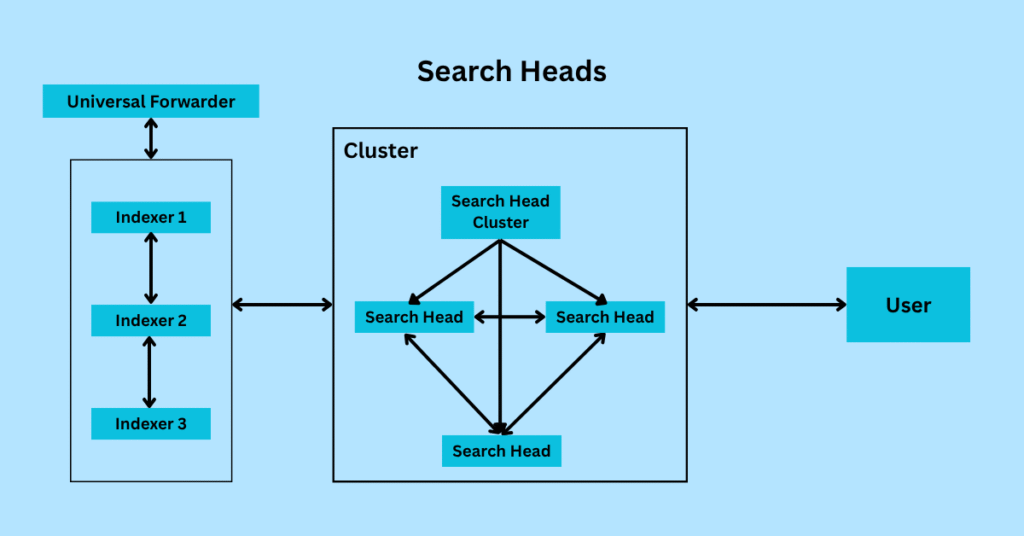
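The benefit of replication can be illustrated with a small Python sketch. Each bucket is copied to a number of distinct peers equal to the replication factor. With a replication factor of N, every bucket survives the loss of any N − 1 peers. The round-robin placement and the function names are assumptions for illustration. In a real cluster, the cluster manager chooses where the copies go.

```python
def place_copies(bucket_ids: list[str], peers: list[str],
                 replication_factor: int) -> dict[str, list[str]]:
    """Give each bucket `replication_factor` copies on distinct peers (round-robin)."""
    if replication_factor > len(peers):
        raise ValueError("replication factor cannot exceed the number of peers")
    placement = {}
    for i, bucket in enumerate(bucket_ids):
        placement[bucket] = [peers[(i + k) % len(peers)] for k in range(replication_factor)]
    return placement


def all_data_available(placement: dict[str, list[str]], failed: set[str]) -> bool:
    """Data survives as long as every bucket still has a copy on a live peer."""
    return all(any(p not in failed for p in copies) for copies in placement.values())


peers = ["idx1", "idx2", "idx3", "idx4"]
placement = place_copies(["b1", "b2", "b3", "b4", "b5"], peers, replication_factor=3)
print(all_data_available(placement, {"idx1", "idx2"}))          # True
print(all_data_available(placement, {"idx1", "idx2", "idx3"}))  # False: b1 lived only there
```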

Search Heads and Search Head Cluster

Search Heads

Search heads are responsible for search processing in Splunk. A search head handles user queries, dashboards, and alerts, and it connects to the indexers to retrieve data. Searches can be submitted through the web UI or the REST API.

Here is how a search head retrieves data, step by step:

- The user enters a search in the search bar, using filters that describe the data they want to find.

- The search head distributes the query to the indexers, and each indexer searches the data it stores.

- The search head consolidates the results from the indexers and returns the final output to the user.

The search head does not store the indexed data. It works like a delivery person, carrying results from the indexers to the dashboard (UI).
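The three steps above follow a scatter-gather pattern. Each indexer filters its own events, and the search head merges the partial results, newest first. This Python sketch assumes that each indexer’s events are already sorted newest first. The in-memory `INDEXERS` data and the function names are hypothetical.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor

# Hypothetical data: each indexer holds (epoch_seconds, raw_event) pairs, newest first.
INDEXERS = {
    "idx1": [(1700000300, "login failed user=bob"), (1700000100, "login ok user=alice")],
    "idx2": [(1700000200, "login failed user=carol")],
}


def search_on_indexer(name: str, term: str) -> list[tuple[int, str]]:
    """Runs on each indexer: filter local events and return only the matches."""
    return [event for event in INDEXERS[name] if term in event[1]]


def search_head(term: str) -> list[tuple[int, str]]:
    """Send the query to every indexer in parallel, then merge results newest first."""
    with ThreadPoolExecutor() as pool:
        partials = list(pool.map(lambda name: search_on_indexer(name, term), INDEXERS))
    # Each partial list is already newest first, so a k-way merge keeps that order.
    return list(heapq.merge(*partials, key=lambda event: event[0], reverse=True))


print(search_head("failed"))
# [(1700000300, 'login failed user=bob'), (1700000200, 'login failed user=carol')]
```

Only matching events travel back to the search head, which is why the search head needs no copy of the indexed data.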

Search Head Cluster

In a search head cluster, one member acts as the captain and coordinates activities with the other members. The captain distributes scheduled searches across the cluster members, which provides load balancing and redundancy.
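The captain’s job of spreading work and recovering from failures can be sketched in Python. Each scheduled search goes to the least-loaded member. If a member fails, its searches are moved to the survivors. The least-loaded heuristic, the search names, and the functions `assign` and `handle_member_failure` are assumptions. They are not Splunk’s actual scheduling logic.

```python
from typing import Optional


def assign(searches: list[str], members: list[str],
           existing: Optional[dict[str, str]] = None) -> dict[str, str]:
    """Give each search to the member with the fewest jobs (ties broken by name)."""
    assignment = dict(existing or {})
    load = {m: 0 for m in members}
    for member in assignment.values():
        if member in load:
            load[member] += 1
    for search in searches:
        member = min(members, key=lambda m: (load[m], m))
        assignment[search] = member
        load[member] += 1
    return assignment


def handle_member_failure(assignment: dict[str, str], failed: str,
                          members: list[str]) -> dict[str, str]:
    """Redundancy: move the failed member's searches onto the surviving members."""
    survivors = [m for m in members if m != failed]
    kept = {s: m for s, m in assignment.items() if m != failed}
    orphaned = [s for s, m in assignment.items() if m == failed]
    return assign(orphaned, survivors, existing=kept)


members = ["sh1", "sh2", "sh3"]
plan = assign(["errors_hourly", "logins_daily", "disk_usage", "fraud_check"], members)
print(plan)                                          # sh1 gets two searches, sh2 and sh3 one each
print(handle_member_failure(plan, "sh1", members))  # sh2 and sh3 now run two each
```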

Deployment Server

The deployment server is another component of Splunk: a central instance used to manage configuration files and apps across multiple clients.

The deployment server is typically used to manage Universal Forwarders and Heavy Forwarders. Clustered indexers are managed by the cluster manager, and search head cluster members are managed by a separate component called the deployer.

How does the deployment server work? It distributes apps that contain configuration files (for example, inputs.conf and outputs.conf) to ensure a consistent setup across all forwarders.

The forwarders it manages are known as deployment clients. By default, each client contacts ("phones home" to) the deployment server every 60 seconds to check for updates. This interval is set by phoneHomeIntervalInSecs in deploymentclient.conf.

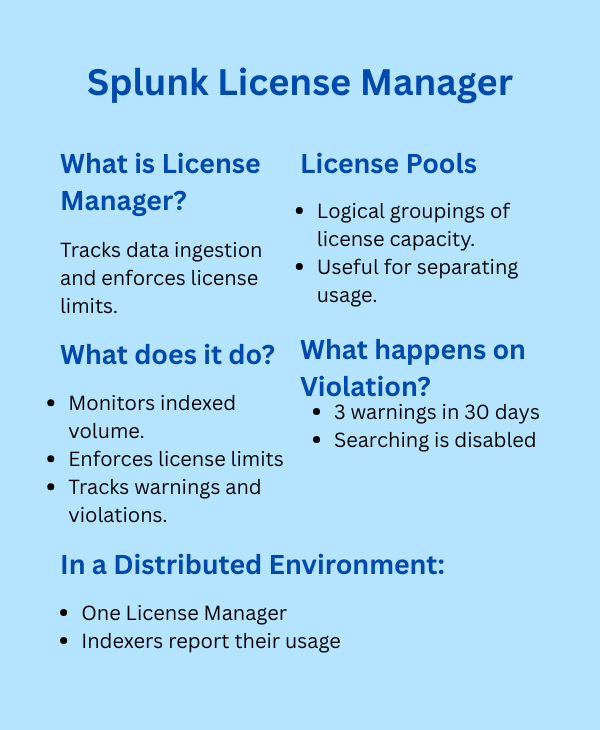
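The check-in cycle between deployment clients and the deployment server can be sketched in Python. On each check-in, the server compares the client’s installed apps against the apps its server classes say it should have, and returns only what is missing or out of date. Splunk does group clients into server classes (in serverclass.conf). The `SERVER_CLASSES` structure, the app names and contents, the SHA-256 checksums, and the `phone_home` function here are hypothetical.

```python
import fnmatch
import hashlib

# Hypothetical server classes: which clients (by hostname pattern) receive which apps.
SERVER_CLASSES = {
    "web_servers": {"whitelist": ["web*"],
                    "apps": {"web_inputs": "[monitor:///var/log/nginx]\n"}},
    "all_forwarders": {"whitelist": ["*"],
                       "apps": {"send_to_indexers": "[tcpout]\n"}},
}


def checksum(content: str) -> str:
    return hashlib.sha256(content.encode()).hexdigest()


def phone_home(hostname: str, installed: dict[str, str]) -> dict[str, str]:
    """Server side: return the apps this client lacks or has an outdated copy of.

    `installed` maps app name -> checksum of the copy the client already has.
    """
    updates = {}
    for server_class in SERVER_CLASSES.values():
        if any(fnmatch.fnmatch(hostname, p) for p in server_class["whitelist"]):
            for app, content in server_class["apps"].items():
                if installed.get(app) != checksum(content):
                    updates[app] = content
    return updates


# First check-in from a web server: it receives both apps.
updates = phone_home("web01", installed={})
installed = {app: checksum(content) for app, content in updates.items()}
print(sorted(updates))                 # ['send_to_indexers', 'web_inputs']
print(phone_home("web01", installed))  # {} : already up to date
print(sorted(phone_home("db01", {})))  # ['send_to_indexers']
```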

License Manager

The License Manager is the Splunk component responsible for tracking data ingestion and enforcing license limits. It usually runs on a dedicated Splunk instance, especially in larger environments.

It provides centralized control for monitoring and managing licensing across distributed deployments.

What does it do?

- Monitors the amount of data indexed per day across all connected indexers.

- Enforces license limits based on your purchased capacity (for example, 10 GB/day or 100 GB/day).

- Tracks:

  - Daily indexing volume

  - License warnings and violations

  - License pool usage

License Pools

- Logical groupings of license capacity.

- You can allocate specific indexers to specific license pools.

- Helpful in separating Prod, Dev, or department-level usage tracking.
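Pool-level tracking can be sketched in Python. The sketch sums each indexer’s daily volume into the pool the indexer is assigned to, then compares each pool against its quota. The indexer names, pool names, volumes, quotas, and the `pool_usage` function are all made up for illustration.

```python
from collections import defaultdict


def pool_usage(daily_gb_by_indexer: dict[str, float],
               pool_of_indexer: dict[str, str],
               pool_quota_gb: dict[str, float]) -> dict[str, tuple[float, bool]]:
    """Sum today's indexed volume per pool and flag pools that are over quota."""
    used: dict[str, float] = defaultdict(float)
    for indexer, gb in daily_gb_by_indexer.items():
        used[pool_of_indexer[indexer]] += gb
    return {pool: (used[pool], used[pool] > quota) for pool, quota in pool_quota_gb.items()}


report = pool_usage(
    {"idx1": 60.0, "idx2": 35.0, "idx3": 12.5},
    {"idx1": "prod", "idx2": "prod", "idx3": "dev"},
    {"prod": 90.0, "dev": 20.0},
)
print(report)  # {'prod': (95.0, True), 'dev': (12.5, False)}
```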

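What happens when you exceed your license?

If you exceed your licensed daily volume:

- Splunk issues a license warning for that day.

- If warnings reach a threshold within a rolling 30-day window, search is blocked (except for internal indexes). The threshold depends on the license type: for example, five warnings for an enforced Splunk Enterprise license and three for Splunk Free.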
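The rolling-window rule can be expressed as a short Python check. The warning threshold is a parameter because it depends on the license type. The 30-day window is taken to include today and the 29 days before it. The function `in_violation` and the sample dates are hypothetical.

```python
from datetime import date, timedelta


def in_violation(warning_days: list[date], today: date,
                 warning_limit: int, window_days: int = 30) -> bool:
    """True if the warnings in the rolling window (ending today) reach the limit."""
    window_start = today - timedelta(days=window_days - 1)
    recent = [d for d in warning_days if window_start <= d <= today]
    return len(recent) >= warning_limit


warning_dates = [date(2024, 5, 1), date(2024, 5, 9), date(2024, 5, 20)]
print(in_violation(warning_dates, today=date(2024, 5, 25), warning_limit=3))  # True
print(in_violation(warning_dates, today=date(2024, 6, 5), warning_limit=3))   # False: May 1 has left the window
```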

To resolve a violation, admins can:

- Reduce ingestion.

- Purchase more license capacity.

- Reset the violations (if allowed).

In a distributed environment

- Only one License Manager per environment.

- All indexers forward their usage data to this License Manager.

- Indexers must be configured to point to it via the licensing settings.

Conclusion

Splunk provides visibility into complex IT systems. It combines logs, metrics, and events to support both real-time monitoring and long-term analysis. Whether the goal is system security or performance optimization, it provides the tools and flexibility needed to make data-driven decisions.

Resources

Cisco Completes Acquisition of Splunk

For Start-Ups That Aim at Giants, Sorting the Data Cloud Is the Next Big Thing