Hakrawler is a simple and fast tool for crawling web pages and discovering a website's URLs. Bug bounty hunters use it to collect a target's URLs and save them to a text file. Hakrawler was created by Luke Stephens, known as “Hakluke” in the cybersecurity community, who also runs a YouTube channel called Hakluke. The tool is written in Go and gathers the URLs and JavaScript file locations of a website, with the aim of discovering the endpoints and assets within a web application or website.

How does Hakrawler work?

When you run hakrawler and pass it a target URL, it collects the URLs of the target's web pages by crawling them, querying the Wayback Machine, and reading the robots.txt and sitemap.xml files. The discovered URLs can include pages on the target itself, subdomains connected to the target, JavaScript files, forms, and links to external websites. All of them are shown in the output.
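To make the robots.txt and sitemap.xml step more concrete, here is a rough shell sketch of how URLs can be pulled out of those two files. It is not hakrawler's own code: robots_paths and sitemap_locs are hypothetical helper names, and the parsing is deliberately simple (it assumes one directive per line and a plain, uncompressed sitemap).

```bash
#!/usr/bin/env bash
# Hypothetical helpers for illustration only; hakrawler does not ship these.

# robots_paths BASE_URL
# Reads robots.txt text on stdin and prints:
#   - BASE_URL + path for every Allow/Disallow rule whose path starts with "/"
#   - the URL of every Sitemap entry
robots_paths() {
  local base="${1%/}"   # drop a trailing slash so paths join cleanly
  tr -d '\r' | awk -v base="$base" '
  {
    line = $0
    sub("#.*", "", line)                  # strip comments
    n = index(line, ":")
    if (n == 0) next                      # not a "Key: value" line
    key = tolower(substr(line, 1, n - 1))
    val = substr(line, n + 1)
    gsub("^[ \t]+|[ \t]+$", "", key)      # trim surrounding whitespace
    gsub("^[ \t]+|[ \t]+$", "", val)
    if ((key == "allow" || key == "disallow") && substr(val, 1, 1) == "/")
      print base val
    else if (key == "sitemap" && val != "")
      print val
  }'
}

# sitemap_locs
# Reads sitemap.xml on stdin and prints the text of each <loc> element.
sitemap_locs() {
  grep -o '<loc>[^<]*</loc>' | sed -e 's/<loc>//' -e 's#</loc>##'
}
```

For example, curl -s https://example.com/robots.txt | robots_paths https://example.com lists the paths mentioned in that site's robots.txt as full URLs.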

Features

- Easy to chain with other tools: it accepts hostnames from stdin and, with the -plain flag, prints plain URLs to stdout.

- Collects URLs from the Wayback Machine, robots.txt, and sitemap.xml files.

- It is fast, in part because it is written in Go.

- Discovers new domains and subdomains of the target as it finds them during the crawl.

- Lets you filter the output to narrow down the scope.

- Can save the results as raw HTTP requests in files, which can then be fed to tools such as SQLMap to test for SQL injection.
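Filtering can also be done after the fact with standard text utilities. The sketch below is not a hakrawler option: in_scope and js_files are hypothetical helper names, and in_scope assumes "in scope" means the domain itself plus any of its subdomains.

```bash
#!/usr/bin/env bash
# Hypothetical post-processing helpers for hakrawler's plain-text output.

# in_scope DOMAIN
# Keeps only URLs whose host is DOMAIN or a subdomain of DOMAIN.
in_scope() {
  awk -v d="$1" '
  BEGIN { d = tolower(d) }
  {
    host = $0
    sub("^[A-Za-z][A-Za-z0-9+.-]*://", "", host)  # drop the scheme
    sub("[/?#].*$", "", host)                     # drop path, query, fragment
    sub(":[0-9]+$", "", host)                     # drop the port
    host = tolower(host)
    suffix = "." d
    if (host == d || (length(host) > length(suffix) &&
        substr(host, length(host) - length(suffix) + 1) == suffix))
      print $0
  }'
}

# js_files
# Keeps only URLs that point at .js files (optionally followed by ?query or #fragment).
js_files() {
  grep -Ei '\.js([?#]|$)'
}
```

Usage might look like echo https://example.com | hakrawler -subs | in_scope example.com | js_files, which keeps only JavaScript files hosted on example.com or its subdomains.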

How to install Hakrawler in Kali Linux?

The same steps work on other Linux distributions, such as Parrot Security OS and Ubuntu.

The only prerequisite is that the Go programming language is already installed.

Go to the tool's GitHub page and copy the install command, as shown below:

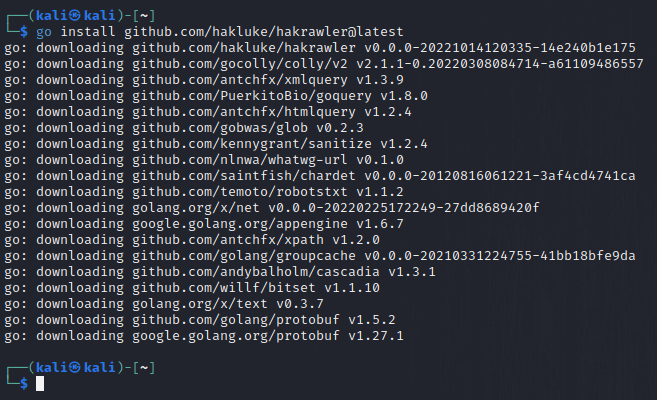

go install github.com/hakluke/hakrawler@latest

Now the tool is installed, as shown in the image below.

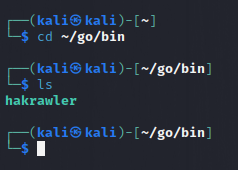

To check that the tool is installed correctly and working, we will look at its help menu. First, go to the directory where go install stores the binaries (~/go/bin by default):

cd ~/go/bin

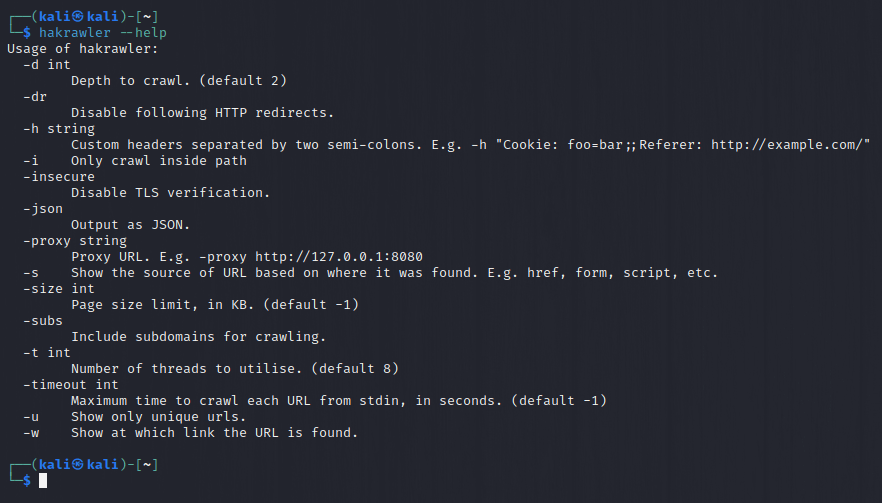

./hakrawler --help

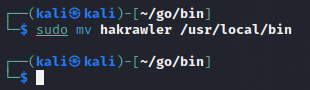

However, the binary can only be run from this directory unless it is on your PATH.

To make it available from any directory, follow these steps:

- Go to the location of the tool (~/go/bin).

- Move the tool to /usr/local/bin, which is on the PATH by default:

sudo mv hakrawler /usr/local/bin
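An alternative to moving the binary is to add Go's install directory to your PATH. This is a minimal sketch, assuming a bash shell (use ~/.zshrc for zsh) and that GOBIN is not set, so go install writes binaries to $(go env GOPATH)/bin:

```bash
# Add to ~/.bashrc, then open a new terminal or run: source ~/.bashrc
# Assumes GOBIN is unset, so `go install` puts binaries in $(go env GOPATH)/bin (~/go/bin by default).
export PATH="$PATH:$(go env GOPATH)/bin"
```

With this approach, a later go install github.com/hakluke/hakrawler@latest updates the same binary you run, instead of leaving an older copy in /usr/local/bin.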

Real-World Output: Visualizing hakrawler in Action

- Single URL

echo https://cyberthreatinsider.com | hakrawler

- Multiple URLs

cat urls.txt | hakrawler
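The examples here pass full URLs, including the scheme. If your list mixes bare hostnames and URLs, a small pre-processing step can tidy it first. normalize_targets is a hypothetical helper, and defaulting bare hostnames to https:// is an assumption you may want to change.

```bash
#!/usr/bin/env bash
# normalize_targets (hypothetical helper)
# Reads hostnames or URLs on stdin and prints one URL per line:
#   - blank lines are skipped
#   - entries without http:// or https:// get https:// prepended (an assumption)
#   - duplicates are dropped, keeping the first occurrence
normalize_targets() {
  awk 'NF {
    u = $1
    if (u !~ /^https?:\/\//) u = "https://" u
    if (!seen[u]++) print u
  }'
}
```

For example: normalize_targets < domains.txt | hakrawler, where domains.txt is a hypothetical file with one host or URL per line.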

- Timeout

Set a timeout of 5 seconds for each URL read from stdin:

cat urls.txt | hakrawler -timeout 5

hakrawler spends at most 5 seconds crawling each input URL and then moves on to the next one; the URLs it found within that time are still shown in the output.

- Send all requests through a proxy

cat urls.txt | hakrawler -proxy http://localhost:8080

- Include subdomains

echo https://cyberthreatinsider.com | hakrawler -subs

Troubleshooting: no URLs returned

A common issue is that the tool returns no URLs. This usually happens when a domain is supplied (https://example.com) but it redirects to a subdomain (https://www.example.com). The subdomain is not in scope, so no URLs are displayed. To fix this, either provide the final URL in the redirect chain or use the -subs option to include subdomains.
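To find the final URL in a redirect chain before crawling, curl can follow the redirects and report where it ended up. final_url is a hypothetical wrapper; it relies on curl's -L (follow redirects) and -w '%{url_effective}' (print the last URL fetched) options.

```bash
#!/usr/bin/env bash
# final_url URL (hypothetical helper, not part of hakrawler; requires curl)
# Follows redirects and prints the URL the chain ends on; the response body is discarded.
final_url() {
  curl -sL --max-time 15 -o /dev/null -w '%{url_effective}\n' "$1"
}

# Usage: crawl the last URL in the redirect chain instead of the one you typed.
#   final_url https://example.com | hakrawler
```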

Output

- JSON-based

echo https://cyberthreatinsider.com | hakrawler -json >> hakrawler_output.txt

- Text-based

echo https://cyberthreatinsider.com | hakrawler >> hakrawler_output.txt
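Because >> appends, running these commands repeatedly will pile up duplicate lines in hakrawler_output.txt. Below is a minimal sketch for de-duplicating the text output in place with sort -u; dedupe_file is a hypothetical helper name, and sorting does not preserve the original order.

```bash
#!/usr/bin/env bash
# dedupe_file FILE (hypothetical helper)
# Sorts FILE and removes duplicate lines, writing the result back to FILE.
# POSIX sort allows the -o output file to be one of the input files.
dedupe_file() {
  sort -u -o "$1" "$1"
}

# Usage:
#   dedupe_file hakrawler_output.txt
# For -json output, a JSON processor such as jq can extract one field per line.
# The field name "URL" is an assumption; inspect a line of your own output first:
#   jq -r '.URL' hakrawler_output.txt | sort -u
```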

Conclusion

Hakrawler is an excellent tool for extracting URLs and subdomains quickly. It was created by Luke Stephens (Hakluke), a YouTuber and bug bounty hunter, and it tends to return results faster than many comparable tools.